Lockdown Results and HHVM Performance

The HHVM team has concluded its first-ever open source performance lockdown, and we’re very excited to share the results with you. During our two-week lockdown, we made strides in optimizing builtin functions, dynamic properties, string concatenation, and the file cache. In addition to improving HHVM, we also looked for places in the open source frameworks where we could contribute patches that would benefit all engines. Our efforts centered on maximizing requests per second (RPS) with Wordpress, Drupal 7, and MediaWiki, using our oss-performance benchmarking tool.

Summary

During lockdown we achieved a 19.4% RPS improvement for MediaWiki workloads and a 1.8% RPS improvement for Wordpress. We demonstrated that HHVM is 55.5% faster than PHP 7 on a MediaWiki workload, 18.7% faster on a Wordpress workload, and 10.2% faster on a Drupal 7 workload. Improvements made to HHVM to better serve open source frameworks will ship with the next release. As part of the lockdown effort, we also submitted a patch to MediaWiki that shows a promising performance improvement for all PHP engines. The raw data, configuration settings, and summary statistics are available; see the Technical Notes below.

Lockdowns are always a great opportunity to get the whole community involved, and we’re thrilled with the participation this time around. Our #hhvm and #hhvm-dev channels on Freenode were quite active and a number of contributors even chimed in on GitHub issues. Special thanks to Christian Sieber for contributing support for Drupal 8 to our benchmarking tool.

Throughout the lockdown we improved our benchmarking tools and optimized our configuration. We’re pleased to report that these frameworks all ran successfully using the high-performance RepoAuthoritative compilation mode and the file cache that we deploy with at Facebook. New tooling in the upcoming 3.8 release will make it easier to take advantage of these powerful tools.

Methodology

While no benchmark will ever perfectly capture the performance profiles of the disparate live sites it seeks to approximate, we took great care in constructing this tool. We feel that it’s important to explain carefully the decisions we’ve made and the effects they’ve had on performance. We hope these notes will shed light on the numbers we’ve shared and help others configure their benchmarking suites or optimize their sites. Questions about the benchmarking methodology can be raised directly on our oss-performance repository; pull requests for new features and fixes are also most welcome.

Our hardware setup consisted of Mac Pro computers running a minimal installation of Ubuntu 14.04 (no virtualization or other layers that would add uncontrolled variables). We chose the Mac Pro because, while convenient to work with, it contains components that are representative of hosting servers, including a Xeon processor, an abundance of RAM, and flash storage. It is also readily available in an identical configuration (see the Technical Notes for details). The configuration offered ample RAM to avoid the possibility of swapping, and an SSD to reduce the effect of disk read latency. The minimal software installation ensured that results were consistent between runs and that benchmarks ran in near isolation.

For HHVM, we chose to run in RepoAuthoritative mode, using the file cache to construct a virtual in-memory file system, and with the proxygen webserver. This setup closely mirrors the configuration we use to run Facebook, and it has been highly optimized. Traffic was proxied through an nginx webserver to allow a fair comparison with PHP, which ran behind nginx via FastCGI. We chose a high thread count, as many of the frameworks were I/O-bound. For a CPU-bound application, we generally recommend a thread count no higher than twice the number of available cores.
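The thread-count guideline above can be expressed as a small helper. This is a minimal sketch, not HHVM configuration: the function name and the `io_bound_threads` parameter are hypothetical, and the post does not say which high thread count was used for the I/O-bound frameworks, so that value is left to the caller.

```python
def recommended_thread_count(cores: int, cpu_bound: bool, io_bound_threads: int) -> int:
    """Suggest a worker thread count.

    cores: number of available CPU cores.
    cpu_bound: True if requests spend most of their time executing PHP.
    io_bound_threads: hypothetical caller-chosen count for I/O-bound apps;
        the post does not state the value used in the benchmarks.
    """
    if cores < 1:
        raise ValueError("cores must be positive")
    ceiling = 2 * cores  # upper bound recommended for CPU-bound applications
    if cpu_bound:
        return ceiling
    # I/O-bound requests spend much of their time waiting, so extra threads
    # help keep the cores busy; never go below the CPU-bound ceiling.
    return max(io_bound_threads, ceiling)
```

For example, on the 6-core machine described in the Technical Notes, a CPU-bound application would be capped at 12 threads.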

Our PHP 5 and 7 setups were quite similar; we enabled the opcache and chose settings tuned for performance. For all engines tested, error reporting and logging were disabled to limit log spew and maximize performance. In production we generally favor a sampling approach that still allows data to be collected about warnings and notices. For benchmarking, we felt that disabling logging entirely was most appropriate.
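The sampling approach mentioned above can be illustrated with Python's standard `logging` module, used here purely as an analogy for PHP warnings and notices. The `SamplingFilter` class and the example rate are hypothetical; the post does not describe how sampling is implemented in production.

```python
import logging
import random
from typing import Optional


class SamplingFilter(logging.Filter):
    """Keep every ERROR-or-worse record, but only a random fraction of the rest."""

    def __init__(self, rate: float, rng: Optional[random.Random] = None):
        super().__init__()
        if not 0.0 <= rate <= 1.0:
            raise ValueError("rate must be between 0 and 1")
        self.rate = rate
        self.rng = rng or random.Random()

    def filter(self, record: logging.LogRecord) -> bool:
        if record.levelno >= logging.ERROR:
            return True
        # Warnings, notices, etc. survive with probability `rate`.
        return self.rng.random() < self.rate
```

Attaching it takes one line, e.g. `logger.addFilter(SamplingFilter(0.01))`: errors always pass, while lower-severity records are kept with the given probability, so aggregate data about warnings is still collected at a fraction of the cost.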

The benchmarking tool performs a sanity check once the engine and webserver have started accepting traffic, to ensure that the framework sends reasonable responses on the URLs being benchmarked. We also collect a count of the various HTTP response codes, the average bytes received, and the number of failed requests. This data is inspected manually after data collection is complete to ensure that the results from the server are reasonable. Note that some of the benchmarked URLs return non-200 response codes, to approximate a realistic distribution of requests to a live server. For all results presented here, this sanity check information is available in the raw JSON data provided in the notes.
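The bookkeeping behind this sanity check can be sketched as follows. The `Response` type, the use of status 0 to mark a failed request, and the summary field names are assumptions for illustration; they are not the oss-performance JSON schema.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Response:
    status: int  # HTTP status code; 0 marks a request that failed outright
    bytes_received: int


def summarize(responses: List[Response]) -> Dict[str, object]:
    """Status-code histogram, failure count, and mean body size of completed requests."""
    completed = [r for r in responses if r.status != 0]
    codes = Counter(r.status for r in completed)
    failed = len(responses) - len(completed)
    avg_bytes = (
        sum(r.bytes_received for r in completed) / len(completed) if completed else 0.0
    )
    return {"status_codes": dict(codes), "failed": failed, "avg_bytes": avg_bytes}
```

A run whose status mix or average response size drifts far from what is expected (for example, a page suddenly returning a small error body) deserves a closer look before its RPS is trusted.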

Each framework we benchmarked was configured with a sample dataset designed to approximate an average installation. For Wordpress and Drupal 7, bundled tools were used to construct demo sites for benchmarking. MediaWiki was benchmarked using the Barack Obama page from Wikipedia, which an engineer from the Wikimedia Foundation recommended as representative of their load. For Wordpress, the URLs queried were based on data extracted from the hhvm.com access logs. Drupal 7 query URLs mirrored the Wordpress access patterns, as access logs from live Drupal sites were not readily available. The MediaWiki URL list was generated to stress the Barack Obama page.
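The post does not describe how URLs were extracted from the hhvm.com access logs. As a minimal sketch, assuming logs in the common nginx/Apache "combined" format, one could count GET request paths while keeping non-200 responses, so that the URL mix reflects real traffic. `weighted_urls` and the sample log format shown in the comment are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Request line of a "combined" format entry, e.g.
# 1.2.3.4 - - [22/May/2015:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 512 "-" "UA"
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[\d.]+"')


def weighted_urls(log_lines: Iterable[str], top_n: int = 20) -> List[Tuple[str, int]]:
    """Most frequently requested GET paths with their hit counts.

    Responses of every status are counted, so error pages stay in the mix."""
    counts: Counter = Counter()
    for line in log_lines:
        m = REQUEST_RE.search(line)
        if m and m.group("method") == "GET":
            counts[m.group("path")] += 1
    return counts.most_common(top_n)
```

The counts can then weight the URL list given to the load generator, for example by repeating each path in proportion to its share of traffic.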

Drupal 8 was benchmarked in a manner similar to Drupal 7, including the use of bundled tooling to generate the sample site. No consensus has developed on whether the page cache should be used when measuring Drupal 8 performance, so our benchmarking suite supports cached and uncached Drupal 8 as separate targets. An additional setup step was performed to pre-populate Drupal 8 Twig templates so that benchmarking could be performed in RepoAuthoritative mode. This is an ahead-of-time optimization that any sufficiently large deployment of this framework would likely benefit from. For both versions of Drupal, a small number of additional tweaks were made to make the sample data more realistic. Details of these changes, as well as the configurations of the other frameworks, are available on our oss-performance repository.

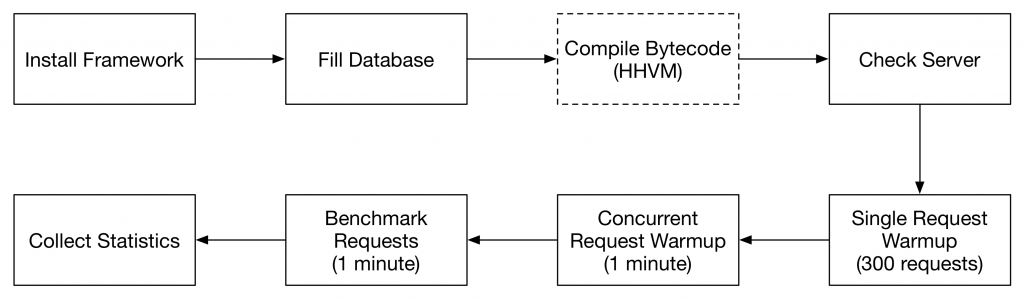

When running each engine, we sent both a set of single-threaded and a set of concurrent warmup requests to each site before we began measuring performance data. We did this to allow hardware caches to warm up, PHP’s opcache to fill, and HHVM’s JIT compilation to complete. The number and duration of these warmup periods were chosen by examining performance profiles for a steady state and by monitoring the size and growth of the HHVM translation cache to ensure that compilation was largely complete. Because we ran HHVM in RepoAuthoritative mode, a setup step was also included to build and optimize the bytecode repository using whole-program analysis, and to construct the static content portion of the file cache for efficient in-memory file system access.
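The post does not say exactly how steady state was detected. One simple approach, sketched here under that caveat, is to sample the translation cache size at a fixed interval during warmup and stop once its relative growth over a recent window falls below a threshold. The function name, window, and tolerance are hypothetical.

```python
from typing import Sequence


def tc_is_steady(sizes: Sequence[int], window: int = 5, tolerance: float = 0.01) -> bool:
    """True once translation-cache growth has flattened out.

    sizes: translation-cache size samples taken at a fixed interval.
    Compares the latest sample with the one `window` intervals earlier and
    reports steady state when the relative growth is below `tolerance`.
    """
    if len(sizes) <= window:
        return False  # not enough history yet
    earlier, latest = sizes[-window - 1], sizes[-1]
    if earlier <= 0:
        return False
    return (latest - earlier) / earlier < tolerance
```

A warmup loop would keep sending requests and appending samples until `tc_is_steady` returns True, then begin measuring.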

Benchmarking requests were sent from 200 concurrent users using the siege benchmarking tool. A high number of concurrent users best approximates the maximum possible RPS of a server under heavy load. Ideally, concurrency would be set to the highest number of users possible before requests begin to queue; as this was not possible with siege, a high number was selected to ensure reliable RPS data. As a consequence of this decision, the response time metric includes time spent queued. A realistic load balancer would prevent such queueing on machines serving live traffic.
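The reason the response-time metric absorbs queueing follows from Little's law (L = λW). With siege keeping a fixed number of users busy, and assuming no think time between requests, the mean response time is simply concurrency divided by RPS, regardless of whether that time is spent executing or waiting in a queue. The helper names and the example numbers are hypothetical.

```python
def mean_response_time(concurrency: int, rps: float) -> float:
    """Little's law, L = lambda * W, rearranged to W = L / lambda.

    Assumes a closed-loop load generator with zero think time, so exactly
    `concurrency` requests are in the system at all times.
    """
    if rps <= 0:
        raise ValueError("rps must be positive")
    return concurrency / rps


def mean_queueing_delay(concurrency: int, rps: float, service_time: float) -> float:
    """Part of the mean response time not explained by service time.

    service_time is a hypothetical input, e.g. measured at concurrency 1."""
    return max(0.0, mean_response_time(concurrency, rps) - service_time)
```

For instance, 200 users sustaining a hypothetical 400 RPS implies a mean response time of 0.5 s; if a single request takes 0.1 s to serve, roughly 0.4 s of that is queueing.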

We discovered that, by default, MediaWiki stores a view counter in MySQL, as well as a cache of each translatable string on any given page. Any large-scale deployment of MediaWiki would need to disable these options, so we turned them off. We’ve confirmed that these settings are also disabled in production for Wikipedia. A patch has been submitted to MediaWiki to cache translations more efficiently. The view counters have been deprecated and will likely be removed entirely in a future release. We found these particular inefficiencies by analyzing the queries MediaWiki sent to MySQL during a single request.

In our initial testing with Drupal 7, we noticed that every request triggered a scan of the Drupal document root, making the is_dir(), opendir(), and readdir() functions extremely hot. It has since been brought to our attention that a known Drupal bug caused the extension used to generate the sample data to be treated as missing, triggering a complete filesystem scan on every request. Currently, the only fix for this issue is to manually remove references to the uninstalled extension from the database. After patching the database, time spent accessing the filesystem drops substantially, and the resulting site behaves identically to a fresh installation of Drupal 7 with manually entered data.
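Before patching, it can help to confirm that the database actually contains such a "zombie" entry. The sketch below assumes a simplified version of Drupal 7's `system` table (columns `name`, `filename`, `type`, `status`, with filenames relative to the Drupal root) and uses an in-memory SQLite database as a stand-in for MySQL; `find_zombie_modules` and the module rows in `demo` are hypothetical. It only reports candidates; removing the rows (after taking a backup) remains the manual fix described above.

```python
import os
import sqlite3
import tempfile


def find_zombie_modules(conn, drupal_root):
    """Names of modules marked enabled (status = 1) whose file is missing on disk."""
    rows = conn.execute(
        "SELECT name, filename FROM system WHERE type = 'module' AND status = 1"
    )
    return sorted(
        name
        for name, filename in rows
        if not os.path.isfile(os.path.join(drupal_root, filename))
    )


def demo():
    # Build a throwaway codebase containing only the node module.
    with tempfile.TemporaryDirectory() as root:
        os.makedirs(os.path.join(root, "modules", "node"))
        open(os.path.join(root, "modules", "node", "node.module"), "w").close()

        # Simplified stand-in for Drupal 7's system table.
        conn = sqlite3.connect(":memory:")
        conn.execute(
            "CREATE TABLE system (name TEXT, filename TEXT, type TEXT, status INTEGER)"
        )
        conn.executemany(
            "INSERT INTO system VALUES (?, ?, ?, ?)",
            [
                ("node", "modules/node/node.module", "module", 1),
                # Enabled when the sample data was generated, absent from the codebase.
                ("devel_generate",
                 "sites/all/modules/devel/devel_generate/devel_generate.module",
                 "module", 1),
                # Missing but not enabled, so it is not reported.
                ("old_module", "sites/all/modules/old/old_module.module", "module", 0),
            ],
        )
        return find_zombie_modules(conn, root)


if __name__ == "__main__":
    print(demo())  # ['devel_generate']
```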

Results

Our results compare pre- and post-lockdown HHVM, and separately PHP 5, PHP 7, and post-lockdown HHVM. We used PHP 5.6.9 and commits from the PHP and HHVM master branches (see notes below). For the pre- and post-lockdown comparison, stable releases of MediaWiki, Drupal 7, and Wordpress were used; the post-lockdown numbers include a MediaWiki patch that was written during the lockdown. The second set of comparisons uses the same stable releases, with the MediaWiki patch applied for all engines. Data for each engine/framework pair was collected in ten independent runs, and RPS was measured for each run.

We observed low variability between runs and have a great deal of confidence in the reproducibility of these results. The raw output of each run in JSON form has been made available in the notes below along with the batch run settings used to configure the benchmark tool. The canonical field in the output indicates whether non-standard options were passed during the test run. The only non-standard option passed during our test runs was to apply the MediaWiki patch. All passed options are available in the configuration JSON.

In the lockdown, we improved MediaWiki performance by 19.4% and Wordpress by 1.8%. Unfortunately, Drupal wins were no longer measurable once the Drupal database was patched to fix the aforementioned extension bug. We also increased RPS for simple pages by 5.2% (largely a measure of the overhead incurred by each request). The lockdown offered an opportunity to evaluate our methodology and to work with the community on realistic configurations for the sample data used in our benchmarking. A number of important changes to the benchmarking tools and framework configurations were made over the course of the lockdown. The results of the lockdown are summarized below, normalized to pre-lockdown RPS numbers. Drupal 8 was not part of our lockdown and is therefore not measured here.

Across the board, we found that HHVM performs best on applications that spend most of their time on the CPU. In particular, we’re 1.55 times faster than PHP 7 on a MediaWiki workload, 1.1 times faster on a Drupal 7 workload, and 1.19 times faster on a Wordpress workload. As none of these frameworks take advantage of the asynchronous I/O architecture available in HHVM (i.e., async), it’s not surprising that the greatest performance benefits come from the efficient execution of PHP code made possible by a JIT compiler. The following figure summarizes the performance difference between PHP 5, PHP 7, and HHVM. Results were normalized to PHP 5 RPS, and Drupal 8 has been included. We benchmarked Drupal 8 with caching both enabled and disabled. In general, the results for Drupal 8 were more stable with the cache disabled.

For all benchmarks we performed ten independent runs and used the mean RPS. We also measured the standard deviation (see error bars). The standard deviations were consistently small, and we’re confident that with the proper configuration and hardware these results should be easy to reproduce.
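The per-benchmark statistics reduce to a mean and a standard deviation over the ten runs, followed by normalization to a baseline. A minimal sketch with hypothetical function names: the post does not say whether the sample or population standard deviation was used, so this assumes the sample form. Note that a normalized ratio of 1.555 and "55.5% faster" describe the same result.

```python
import statistics
from typing import List, Tuple


def summarize_runs(rps_runs: List[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of RPS over independent runs."""
    if len(rps_runs) < 2:
        raise ValueError("need at least two runs for a standard deviation")
    return statistics.mean(rps_runs), statistics.stdev(rps_runs)


def relative_rps(mean_rps: float, baseline_mean_rps: float) -> float:
    """Mean RPS normalized to a baseline (e.g. PHP 5 or pre-lockdown HHVM)."""
    return mean_rps / baseline_mean_rps


def percent_faster(mean_rps: float, baseline_mean_rps: float) -> float:
    """The same ratio expressed as a percentage gain over the baseline."""
    return (relative_rps(mean_rps, baseline_mean_rps) - 1.0) * 100.0
```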

As an exercise, we evaluated the benefits of async MySQL in the Wordpress environment. By modifying portions of Wordpress to take advantage of the async capabilities offered by Hack and HHVM, we were able to examine the potential for performance gains through async execution. In our test environment, we moved MySQL and PHP onto separate machines within the same datacenter to approximate a realistic Wordpress stack. Introducing asynchronous query execution can yield gains in both RPS and response time. We’ll be writing separately about this in the near future.
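The modified Wordpress code isn't shown here, but the principle can be sketched with Python's `asyncio` as a stand-in for Hack's async: independent queries issued together cost roughly the slowest round trip rather than the sum of all of them. `fake_query` simulates a MySQL round trip with a sleep; it and the other helper names are hypothetical.

```python
import asyncio
import time
from typing import List, Tuple

Query = Tuple[str, float]  # (label, simulated round-trip latency in seconds)


async def fake_query(name: str, latency: float) -> str:
    # Stand-in for a round trip to a MySQL server on another machine.
    await asyncio.sleep(latency)
    return name


async def sequential(queries: List[Query]) -> List[str]:
    # Each query waits for the previous one: latencies add up.
    return [await fake_query(n, t) for n, t in queries]


async def concurrent(queries: List[Query]) -> List[str]:
    # All queries are in flight at once; gather preserves input order.
    return list(await asyncio.gather(*(fake_query(n, t) for n, t in queries)))


def timed(runner, queries: List[Query]):
    start = time.perf_counter()
    result = asyncio.run(runner(queries))
    return result, time.perf_counter() - start
```

With three hypothetical 0.05-second queries, `timed(sequential, q)` should take roughly 0.15 s and `timed(concurrent, q)` roughly 0.05 s, with identical results in the same order.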

Lockdown Optimizations

By sharing the details of some select successes and failures from the lockdown, we’d like to provide a window into the work we’ve been doing and offer a guide to anyone considering working on performance-related patches for HHVM. Lockdown issues are available on the HHVM GitHub repository and have been tagged with “lockdown.” They include our running commentary and notes about our findings as we implemented and measured them.

The issues we focused on fell broadly into several categories: builtin functions, extensions, function dispatch, memory model, JIT compilation, and framework-specific patches. In addition to exploring these optimizations, we also experimented with introducing async into Wordpress to measure its benefit.

The optimizations to builtin functions included get_object_vars() (#5287), implode() (#5289), function_exists() (#5288), and defined() (#5290). The first two optimizations were quite fruitful for both Wordpress and MediaWiki. We had previously predicted that function_exists() and defined() would be good targets for Drupal 7. Unfortunately defined() was not hot enough to have a measurable performance effect, and function_exists() was primarily called with non-static strings making it difficult to optimize.
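Why non-static arguments make function_exists() hard to optimize can be shown with a toy "lowering" step. This is an illustration only, not HHVM's JIT or repo optimizer: `Literal`, `Dynamic`, and `lower_function_exists` are hypothetical names, and the sketch assumes the complete set of defined functions is known ahead of time, as whole-program analysis aims to provide. PHP function names are case-insensitive, so names are compared in lowercase.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import FrozenSet, Tuple, Union


@dataclass(frozen=True)
class Literal:
    value: str  # e.g. function_exists('drupal_bootstrap')


@dataclass(frozen=True)
class Dynamic:
    expr: str  # source text of a run-time expression, e.g. "$module . '_init'"


def lower_function_exists(
    arg: Union[Literal, Dynamic], known_functions: FrozenSet[str]
) -> Tuple[str, object]:
    """Toy lowering of function_exists(arg).

    known_functions must hold lowercase names and be complete and stable."""
    if isinstance(arg, Literal):
        # The answer is fixed at compile time: emit a constant, no lookup.
        return ("const", arg.value.lower() in known_functions)
    # The name is unknown until run time: a lookup remains on every call.
    return ("runtime_lookup", arg.expr)
```

A call such as `function_exists($module . '_init')` can only take the second branch, which matches the Drupal 7 situation described above.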

We also looked at optimizing string concatenation in the JIT using a specialized bytecode for multi-string concatenations (#5304). Although this is a valuable optimization, we were unable to measure a performance benefit in any of these frameworks. Most multi-string concatenations occurred in places that retained a reference to the strings being joined, which disabled an important feature of the optimization: reusing the buffers of consumed strings. Another potential JIT optimization was object destruction (#5281), but experimentation led us to conclude that changes to the object destruction path would not be worthwhile. Building a non-refcounted garbage collector is an ongoing HHVM project and may offer more substantial performance benefits in this area.
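The idea behind a multi-string concatenation bytecode can be sketched by counting bytes copied. This is a Python model, not HHVM code, and the function names are hypothetical. Pairwise concatenation re-copies the growing prefix at every step, while a single n-ary concatenation sizes one buffer up front and copies each input once. The buffer-reuse feature mentioned above (growing a consumed string's buffer in place when nothing else references it) is not modeled here.

```python
from typing import List, Tuple


def concat_pairwise(parts: List[bytes]) -> Tuple[bytes, int]:
    """a . b . c evaluated two operands at a time."""
    result = b""
    copied = 0
    for p in parts:
        result = result + p  # allocates a new buffer holding everything so far
        copied += len(result)
    return result, copied


def concat_n(parts: List[bytes]) -> Tuple[bytearray, int]:
    """All operands joined in one step with a single allocation."""
    total = sum(len(p) for p in parts)
    buf = bytearray(total)  # sized once, never reallocated
    offset = 0
    for p in parts:
        buf[offset:offset + len(p)] = p
        offset += len(p)
    return buf, total  # each input byte was copied exactly once
```

For three 2-byte strings, the pairwise version copies 2 + 4 + 6 = 12 bytes, while the single-allocation version copies 6.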

Several important enhancements to builtin function dispatch were made (#5276, #5277), and several more are planned (#5267, #5292). The completed optimizations allow faster inline dispatch to functions with variadics and improve support for static method dispatch for builtin classes. These changes have already shown measurable performance gains in Wordpress, and we expect a tangible benefit from the remaining patches once they are ready to be merged.

Currently we build against libpcre, even though libpcre2 is available. We tested whether this new PCRE library was more performant (#5302). Unfortunately, the results were not encouraging. Further discussions with the maintainers of libpcre2 led us to conclude that the changes largely concerned the API and that performance optimization was not a goal. In the future we may explore optionally using a different regular expression library for compatible expressions (though to remain feature-complete, we will always need to provide libpcre as a fallback).
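The fallback arrangement described above might look like the following sketch, where a plain substring search stands in for a faster but less complete engine and Python's `re` module plays the role of libpcre. The classification rule (no regex metacharacters means the pattern is a literal) and the function name are assumptions made for illustration; a real dispatcher would need a far more careful compatibility check.

```python
import re
from typing import Callable, Optional, Tuple

Span = Optional[Tuple[int, int]]
_REGEX_META = set(".^$*+?{}[]\\|()")


def compile_with_fallback(pattern: str) -> Tuple[Callable[[str], Span], str]:
    """Return (search_function, engine_name) for `pattern`."""
    if not any(ch in _REGEX_META for ch in pattern):
        # Fast path: the pattern is a plain literal, so substring search suffices.
        def literal_search(text: str) -> Span:
            i = text.find(pattern)
            return None if i < 0 else (i, i + len(pattern))

        return literal_search, "literal"

    # Fallback: the full-featured engine handles everything else.
    compiled = re.compile(pattern)

    def regex_search(text: str) -> Span:
        m = compiled.search(text)
        return None if m is None else m.span()

    return regex_search, "re"
```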

Profiling showed that dynamic property access is a hot code path for Wordpress, so we worked to optimize dynamic properties and the cloning of objects with such properties (#5285, #5286, #5287). In HHVM, dynamic properties are stored in arrays, which were previously copied eagerly when an object was cloned and when get_object_vars() was called. By allowing copy-on-write behavior for these property arrays, similar to the behavior of regular PHP arrays, we measured performance improvements in Wordpress.
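Copy-on-write for property arrays can be sketched as follows. This is a simplified Python model, not HHVM's array implementation: `CowDict` is hypothetical, and it shares a counter between clones instead of using a real reference count, so dropping a clone does not decrement the count (which can only cause an extra copy, never a wrong result).

```python
class CowDict:
    """Dict-like storage whose clones share data until one of them is written."""

    def __init__(self, data=None):
        self._data = dict(data or {})
        # Shared counter of how many CowDicts currently point at self._data.
        self._refs = [1]

    def clone(self) -> "CowDict":
        # O(1): no entries are copied, only the reference is shared.
        other = CowDict.__new__(CowDict)
        other._data = self._data
        other._refs = self._refs
        self._refs[0] += 1
        return other

    def _separate(self) -> None:
        # Copy on the first write while the storage is still shared.
        if self._refs[0] > 1:
            self._refs[0] -= 1
            self._data = dict(self._data)
            self._refs = [1]

    def __getitem__(self, key):
        return self._data[key]

    def __setitem__(self, key, value):
        self._separate()
        self._data[key] = value

    def shares_storage_with(self, other: "CowDict") -> bool:
        return self._data is other._data
```

Cloning an object whose dynamic properties are never modified afterwards then costs no copy at all, which is the common case the optimization targets.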

Some of our largest wins have come from examining the frameworks themselves. In particular, reconfiguring MediaWiki to move translatable string caching out of MySQL showed a 14% performance win using HHVM and a 21% win using PHP 7. We created an alternative implementation that improved RPS by an additional 22% and 5%, respectively, bringing the total improvement for HHVM and PHP 7 to 39% and 33%. We’re happy to be able to contribute back to another open source project and are working to upstream these changes.

Some notable wins disappeared as we tuned our benchmarking methodology and patched the frameworks themselves. For MediaWiki, optimizing str_replace() was a major win, but the hot path through str_replace() was eliminated by the subsequent patch to fix localization caching. Likewise, optimizations to the file cache showed a great deal of promise for Drupal 7 (#5284) until we discovered that Drupal’s document tree scanning was caused by a bug, which we were able to fix with a database change.

Work continues on a number of these changes (#5264, #5269, #5270, #5299), and others have been completed post-lockdown (#5300, #5279). Of the notable post-lockdown completions, work to speed up memcpy showed major performance gains for our internal workload.

We’re all quite thrilled with the progress made during the lockdown and the lessons learned. In particular, we feel that we’ve been able to validate the importance of both JIT compilation and asynchronous execution for optimizing PHP performance. Amdahl’s law tells us that in an I/O-bound application, any improvement in PHP execution efficiency is limited by the time spent waiting on I/O. Async offers us the opportunity to shift latency back to the CPU by improving I/O performance, which increases the importance of efficient PHP execution through JIT compilation. By combining async and JIT compilation, we’re able to run applications such as Facebook at scale.
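Amdahl's law makes this argument concrete: if a fraction p of request time is spent executing PHP and that portion becomes s times faster, the overall speedup is 1 / ((1 - p) + p / s). The helper below is a direct transcription; the example fractions in the note are hypothetical, not measurements from these benchmarks.

```python
def amdahl_speedup(fraction_improved: float, factor: float) -> float:
    """Overall speedup when `fraction_improved` of run time becomes `factor` times faster."""
    if not 0.0 <= fraction_improved <= 1.0 or factor <= 0:
        raise ValueError("fraction must be in [0, 1] and factor must be positive")
    # The unimproved part (e.g. waiting on I/O) is untouched;
    # only the improved part shrinks by `factor`.
    return 1.0 / ((1.0 - fraction_improved) + fraction_improved / factor)
```

With these assumed numbers, doubling PHP execution speed yields about 1.82x overall when PHP accounts for 90% of request time, but only about 1.18x when it accounts for 30% and the rest is spent waiting on I/O.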

Technical Notes

Frameworks used - Collected statistics came from stable releases and betas of several popular frameworks. For Drupal we measured 7.31 and 8.0.0-beta11. For MediaWiki and Wordpress, versions 1.24.0 and 4.2.0 were used, respectively. Data for Wordpress was generated using demo-data-creator version 1.3.2. The patch developed for MediaWiki, used in the cross-engine comparisons and with post-lockdown HHVM, is available on the oss-performance repository. Update: this patch has now been merged into MediaWiki.

Hardware details - Benchmark statistics were collected on a late 2013 Mac Pro (MD878LL/A). The machine features a 6-core 3.5 GHz Xeon processor with 64 GB of RAM and a 512 GB SSD.

Siege - When benchmarking frameworks we used Siege 2.78; currently, all versions of Siege 3 have known problems. In particular, Siege 3.0.0 to 3.0.7 send incorrect Host headers to ports other than 80 and 443, and Siege 3.0.8 and 3.0.9 occasionally send bad paths due to incorrect redirect handling.
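A benchmark harness could guard against these Siege problems before starting a run. This sketch encodes only the issues reported in this post; `siege_known_issue` is a hypothetical helper, and it assumes versions are given as dotted integers such as "3.0.7".

```python
from typing import Optional


def siege_known_issue(version: str) -> Optional[str]:
    """Known problem for a Siege version, per the notes in this post, or None."""
    parts = tuple(int(p) for p in version.split("."))
    if parts[0] != 3:
        return None  # only Siege 3 problems are listed here (2.78 was used)
    if len(parts) >= 3 and parts[:2] == (3, 0):
        if parts[2] <= 7:
            return "sends incorrect Host headers to ports other than 80 and 443"
        if parts[2] in (8, 9):
            return "occasionally sends bad paths due to incorrect redirect handling"
    return "Siege 3 releases were reported to have known problems"
```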

Nginx - All requests were proxied through nginx; this was required because Siege cannot talk directly to a FastCGI server. We used nginx 1.4.6. The configuration settings for nginx are available in the oss-performance repository.

Engines Benchmarked - To compare pre- and post-lockdown performance of HHVM, we measured builds of HHVM master from May 11th and May 22nd. For the PHP/HHVM comparisons, post-lockdown HHVM, PHP stable release 5.6.9, and PHP 7 master from May 22nd were used.

Result Details - For all data used here, we’ve made available the raw JSON output of each run of the oss-performance tool, along with the settings we used. We strongly recommend that anyone publishing results from this tool also make this diagnostic data available. We are also making available the summary statistics computed across runs.

Response Time - We haven’t included response times in this report as lockdown optimizations focused on RPS. Response time is a more important metric for many smaller sites and the numbers we collected are available in the raw data. We hope to make improvements to the benchmarking tool to generate concurrency/response time and concurrency/RPS curves.

SugarCRM - There has been some interest in the SugarCRM performance of the various PHP engines. We were unable to provide these numbers, as we could not find a recent version of PHP 7 that could execute SugarCRM without encountering a segmentation fault. Hopefully it will be possible to collect these numbers in the future as PHP 7 matures.

Comments

- RobW: Can you link to the drupal bug about searching the file system that you fixed with a db change?

- Tim Siebels: Great article! I really like that you explained what setup was used in detail. Reading the issue's comments during lockdown was fun, as you could see progress. Furthermore you included performance gains for PHP which shows that this isn't a fight with vanilla PHP but is a healthy competition. :) Keep getting more open, I like it! :)

- Fred Emmott: Thanks :) For reference (sorry we forgot to include it in the post) the MediaWiki issue with full results is here: https://phabricator.wikimedia.org/T99740

- Fred Emmott: Sorry, this was from an external contributor and we don't have the source handy. We've asked for details and will get back to you :)

- Joel Marcey: Thanks Tim!

- Kazanir: What happened here was that devel_generate was used to generate the content in the database dump, but the module wasn't actually disabled or uninstalled. Then the database dump was used to drive a codebase that lacked that module. This results in a "zombie" entry in Drupal's system table where a module is recorded as enabled despite not existing. (This is a known issue on the Drupal side.) This results in each page request seeing the "missing" module and scanning the entire filesystem below modules/ to try to find it on every request. Oops.

- Esa: You are leading the whole PHP community. Mark should be proud of you. You deserve to have the whole PHP community welcome you for your achievements.

- mikeytown2: There are a couple of async MySQL Drupal modules out there, but they use mysqlnd, mysqli and MYSQLI_ASYNC instead of HHVM async, as it wasn't available when the code was developed. See https://www.drupal.org/project/apdqc & https://www.drupal.org/sandbox/gielfeldt/2422171

- mikeytown2: I believe this is the issue - https://www.drupal.org/node/1081266

- Meserlian: This is awesome testing. Looking forward to the async test for WP.

- Nemo: Thanks for your testing. From several things mentioned, it seems you tested an older release of MediaWiki (1.24?) with vanilla settings. For higher realism, it would probably have been more useful to test the settings commonly used by any "serious" MediaWiki wiki, see https://www.mediawiki.org/wiki/Manual:Performance_tuning for the basics.

- Radu Murzea: I really love the incredible amount of detail in this post and, obviously, the impressive results of your improvements. I'm really curious to see how these tweaks affect performance of a much broader collection of PHP frameworks and CMS-es.

- Peter: Awesome, I really fell in love with HHVM over the last couple of months. I can't wait for 3.8.

- Fred Emmott: We're on a slightly older version because:
  - it's only one minor version behind
  - we're not aware of significant performance changes
  - if there are any, we would expect them to mostly benefit HHVM anyway, as that's the engine Wikipedia has recently switched to
  - updating releases has a high cost: as well as the basic mechanics, we need to make sure the results are still consistent, re-profile to make sure there haven't been any changes in the defaults that are good for 'just works' but aren't realistic (the documentation is not good enough for this), etc.

  It is not vanilla:
  - we use a custom file-based localization cache (an improvement over MediaWiki's file and MySQL caches), which improves performance on PHP5, PHP7, and HHVM: http://cl.ly/image/3I2m2a190G3N
  - we disable view counters

  In general, we aim to optimize and reconfigure by profiling and looking for bottlenecks, rather than trusting often-incorrect or outdated documentation. The MediaWiki docs were good though, so to address them:
  - opcode caching is enabled by default in all recent versions of PHP, and irrelevant in HHVM. Our benchmarking script makes sure it is enabled when running PHP5 or PHP7
  - memcached is not used, both because object caching does not show up as significant in profiles, and because there is not yet a sufficiently complete and stable implementation for PHP7
  - output caching is not used, as this would be nearly a no-op with an HTTP cache in place, and would roughly turn the benchmark into 'Hello, world'. The uncached Barack Obama page is representative of the load on Wikipedia's application servers
  - HTTP caching: again, turns it into 'Hello, world'. While this does mean that our numbers are not representative of the RPS you'd get on the whole system, presumably if you're looking at PHP performance, the performance of the application servers is what you want to focus on
  - mbstring is enabled in PHP5, PHP7, and HHVM
  - FastStringSearch is not used because it is not available for PHP7, which would prevent like-for-like comparisons
  - the MySQL lock options do not have an effect, and should not be particularly relevant as our benchmark does not include writes
  - we have plenty of RAM for MySQL, $PHP_IMPLEMENTATION, and nginx.

- HHVM Demonstrated to be 18.7% Faster Than PHP 7 on a WordPress Workload: […] week HHVM developers shared the results of their first ever open source performance lockdown. HHVM is Facebook’s open source PHP execution engine, originally created to help make its […]

- Guilherme Cardoso: Sorry for asking this here. ATM attributes are available at class level and methods. Is it in the roadmap to include them as well on properties or is something impossible thanks to PHP dynamic nature? When looking for web frameworks features, implementing attributes in properties allow us to define most common configs (entities mapping, validations mappings, etc) in a good way, similar to C# attributes features. ATM i'm doing it in properties getters which is ugly of course.

- Nemo: Thanks for the clarifications, in particular on localisation cache; it was not clear to me what you meant by «we’ve specifically turned them off» before reading https://github.com/hhvm/oss-performance/blob/master/README.md#mediawiki . I still wonder about the effect of some other standard configurations you may have missed, like $wgJobRunRate.

- HHVM Performance Lockdown: Is HHVM Reaching Its Limit?: […] latest HHVM blogpost is all about getting performance gains from HHVM in real-life situations. With a noticeable increase for MediaWiki, all other frameworks are […]

- L’hebdo de l’écosystème WordPress #22: […] you are following the war that PHP and HHVM are waging to optimize the performance of their PHP interpreter respect…, hhvm published its latest […] this week

- Josh Watzman: No fundamental reason why we haven't allowed it, just haven't gotten around to building it :) I just filed https://github.com/facebook/hhvm/issues/5493 for you. In the future, GitHub issues or IRC are, respectively, better ways of filing feature requests or asking questions than a comment on a random blog post :)

- Jani Tarvainen: I strapped the HTTP/2 capable H2O web server to HHVM to go ahead and make it serve like they do in 2020 :) https://www.symfony.fi/entry/serving-php-on-http-2-with-h2o-and-hhvm-symfony-wordpress-drupal

- wwbmmm: You had optimized for RepoAuthoritative mode with proxygen server. What about non RepoAuthoritative mode and fastcgi server? This is more popular use case (except facebook) and is more compatible with zend php.

- Paul Bissonnette: The numbers are based on proxygen running behind nginx; we found that it showed a small performance bump compared to fastcgi behind nginx, but the difference was very small. We have in the past published numbers based on non-RepoAuthoritative mode, and will continue to track and improve its performance. For a variety of reasons, running in RepoAuthoritative mode will always be a substantial performance benefit. Put simply, whole-program analysis allows a host of powerful optimizations that depend on the knowledge that all execution paths are both known and stable. It is worth noting that even in non-RepoAuthoritative mode we are quite performant, and the performance benefit from this mode is probably only going to be important to those operating at a sufficiently large scale. We are aware of other large deployments that use, or are exploring the future use of, this setting. We've also added tooling to make it easier to experiment with RepoAuthoritative mode (mentioned in the article). Obviously there is no one ideal configuration that will work for every installation, but we feel strongly that the configuration presented here is a good choice for large sites that deeply care about this kind of performance. The article was already complicated enough with one set of configuration options, so we chose the ones that would matter most to anyone operating at scale.

- wwbmmm: Are you going to do some optimization for non-RepoAuthoritative mode? My profiling shows that ExecutionContext::lookupPhpFile costs 11% inclusive CPU in non-RepoAuthoritative mode. Those who say "php7 is faster than hhvm" only compare php7 with hhvm-no-repo.

- Paul Bissonnette: We're committed to improving both modes, though at the end of the day the metric we watch most carefully is repo-mode performance. We've done a fair bit of profiling in non-repo mode and have made some important improvements. I've never seen lookupPhpFile in any of the profiles I've examined, and the function doesn't appear to be present in master, 3.3, 3.6, or 3.7 (are you benchmarking an old version of hhvm?). I'd be curious to hear more details about how you're performing this profiling and I'd be happy to work with the data to make improvements or review pull-requests. There's been a lot of confusion about how to benchmark PHP engines in a fashion that's representative of a production environment. A good portion of this article has been about trying to formalize some of the lessons we learned. The hope is that we might provide a sane hhvm configuration as well as a framework for simulating a production environment for others who wish to run their own benchmarks. I've seen some of the numbers being passed around but so little has been shared of the methodology that I can't really comment on the accuracy of those results.

- wwbmmm: I have profiled in 3.0 and 3.6; 3.6 is slightly faster. Do you have an email address? I can send you more details.

- Paul Bissonnette: Sure, paulbiss (at) fb.com, you can also find me on our Freenode channels #hhvm and #hhvm-dev (I'm usually around during normal business hours in California). Thanks!

- HHVM Now Faster than PHP 7 | Hackers Media: […] to the report, various setups were tested, with technologies like WordPress, Drupal, and MediaWiki used in a […]

- Craig Carnell: Where are the Magento results? :)

- Fred Emmott: Magento 1: HHVM 3.7: 119.73 RPS; HHVM 3.8: 124.47 RPS; improvement: 4%. Magento 2: pull requests welcome ;) https://github.com/hhvm/oss-performance

- Fred Emmott: We didn't include them in the post as Magento wasn't part of the lockdown.